Ecosystem Approach Community of Practice: Validation


Latest revision as of 15:09, 10 November 2014

Goal and Overview

According to the Description of Work, the conception and validation of concrete applications serving the business cases is part of the WP3 activity. The goal of the validation activity is to

- document status and issues on harmonization approaches, and

- validate implemented policies and business cases, their functionality and conformance to requirements.

The approach taken in iMarine builds on the experience gained in the validation efforts of the preceding D4Science-II project, where the FURPS model was adopted to generate templates against which to 'benchmark' the performance of a component.

FURPS is an acronym representing a model for classifying software quality attributes (functional and non-functional requirements):

- Functionality - Feature set, Capabilities, Generality, Security

- Usability - Human factors, Aesthetics, Consistency, Documentation

- Reliability - Frequency/severity of failure, Recoverability, Predictability, Accuracy, Mean time to failure

- Performance - Speed, Efficiency, Throughput, Response time

- Supportability - Testability, Extensibility, Adaptability, Maintainability, Compatibility, Serviceability, Localizability
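To make the classification concrete, the FURPS list can be held as a small lookup table that maps each quality attribute to its category, for instance when sorting validator comments. This is a minimal Python sketch; the names `FURPS` and `furps_category` are hypothetical and do not belong to any iMarine or gCube tool.

```python
# Hypothetical lookup table mirroring the FURPS list above.
FURPS = {
    "Functionality": ["Feature set", "Capabilities", "Generality", "Security"],
    "Usability": ["Human factors", "Aesthetics", "Consistency", "Documentation"],
    "Reliability": [
        "Frequency/severity of failure",
        "Recoverability",
        "Predictability",
        "Accuracy",
        "Mean time to failure",
    ],
    "Performance": ["Speed", "Efficiency", "Throughput", "Response time"],
    "Supportability": [
        "Testability", "Extensibility", "Adaptability", "Maintainability",
        "Compatibility", "Serviceability", "Localizability",
    ],
}

# Reverse index: lower-cased attribute -> FURPS category.
_ATTRIBUTE_INDEX = {
    attr.lower(): category
    for category, attrs in FURPS.items()
    for attr in attrs
}


def furps_category(attribute: str) -> str:
    """Return the FURPS category of a quality attribute (case-insensitive)."""
    try:
        return _ATTRIBUTE_INDEX[attribute.strip().lower()]
    except KeyError:
        raise ValueError(f"not a FURPS attribute: {attribute!r}") from None


if __name__ == "__main__":
    print(furps_category("Throughput"))     # Performance
    print(furps_category("documentation"))  # Usability
```

The reverse index is built once, so each lookup is a single dictionary access; unknown attributes raise an error instead of silently falling into a default category.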

In iMarine, where the commitments of the EA-CoP reach further than in the previous project, validation will be done on requirements and specifications produced by the EA-CoP, where these can be aligned with iMarine Board Work Plan objectives.

This implies that validation is not equivalent to testing individual component behavior and performance in the e-Infrastructure, but assesses progress towards achieving a Board objective. This gives validation an important role in one of the Board's main activities: priority setting to improve the sustainability of the e-Infrastructure.

Validation thus focuses on project outputs, and does not intend to influence the underlying software architecture, development models, software paradigms or code base.

A validation is triggered by the release of a software component, part thereof, or data source that is accessible by project partners through a GUI in the e-Infrastructure.

Procedure(s) and Supporting tools

The validation procedure

Once a VRE is released, those Board Members that have expressed their interest in the component (through the Board Work Plan) are alerted by the WP3 Leader, and the validation commences with the compilation of a set of questions based on the above FURPS criteria.

The feed-back is collected by the WP3 Leader, discussed with the technical Director or other project representatives, and, when necessary, enhancement tickets are produced, with a clear reference to the released component.

If the released VRE is validated for the first time, the Round 1 results are discussed with the validators and the EA-CoP members they represent. If needed, the iMarine project is asked to modify the delivered functionality. If these modifications exceed the level of enhancement tickets, a Round 2 validation is foreseen, which can be followed by further rounds until a satisfactory level of completeness has been achieved.
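The round logic described above can be sketched as a loop: a new round is opened only while the requested modifications exceed what enhancement tickets can handle. This is a minimal Python sketch under stated assumptions; `RoundResult`, `run_validation`, and the `max_rounds` safety limit are hypothetical and are not part of the documented procedure, which sets no limit on the number of rounds.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class RoundResult:
    """Outcome of one validation round (hypothetical structure)."""
    satisfactory: bool      # validators judge the VRE complete enough
    exceeds_tickets: bool   # requested changes go beyond enhancement tickets
    enhancement_tickets: List[str] = field(default_factory=list)  # minor fixes


def run_validation(
    vre: str,
    run_round: Callable[[str, int], RoundResult],
    max_rounds: int = 5,  # assumed safety limit; the procedure sets none
) -> int:
    """Run Round 1, 2, ... and return the number of rounds performed."""
    for round_no in range(1, max_rounds + 1):
        result = run_round(vre, round_no)
        # Stop when the VRE is satisfactory, or when the remaining changes
        # are small enough to be handled through enhancement tickets alone.
        if result.satisfactory or not result.exceeds_tickets:
            return round_no
    raise RuntimeError(f"{vre}: still incomplete after {max_rounds} rounds")


if __name__ == "__main__":
    # Scripted example: Round 1 asks for major changes, Round 2 is satisfactory.
    outcomes = {
        1: RoundResult(satisfactory=False, exceeds_tickets=True),
        2: RoundResult(satisfactory=True, exceeds_tickets=False,
                       enhancement_tickets=["minor label fix"]),
    }
    print(run_validation("ExampleVRE", lambda vre, n: outcomes[n]))  # 2
```

Passing the round itself in as a callable keeps the sketch independent of how feedback is actually gathered (questionnaires, meetings, wiki pages).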

This validation approach is fully in line with the application development methodology, which favors an evolutionary approach. With this approach, a robust but not functionally complete application is released to the validators and then constantly refined by adding new features and by assessing the validators’ comments and feedback. This evolutionary approach acknowledges that requirements are neither always completely defined by the community nor completely understood by the technology providers, and therefore builds only those that are well understood and mature. It allows the technology providers to add features, accommodate new requests, or make changes that could not be conceived during the initial requirements phase, accepting that an innovative VRE must evolve through use in its intended operational environment.

The reporting on the wiki and in other tools

Validation results will also be documented in this wiki, which will contain the name of the component validated, the dates of validation, and the results. Tickets will refer to the component name, the page where the validator comment originated, a descriptive text, and links to relevant documentation on the iMarine Board work plan and the validation wiki page.

In addition, when validation reveals an error, specific 'Issue tickets' can be entered in the project TRAC system. If a validator encounters a functionality, usability, or performance issue, specific 'enhancement tickets' can be produced in liaison with the WP3 Leader. Supportability issues are usually discussed in other fora.
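The ticket contents and routing rules above can be summarized in a short Python sketch. It is illustrative only: `ValidationTicket`, `route_finding`, and `make_ticket` are hypothetical names, not the TRAC API. The text gives no rule for non-error Reliability findings, so the sketch raises an error for them rather than guessing.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ValidationTicket:
    """Fields follow the ticket contents listed above (names are hypothetical)."""
    kind: str                 # "issue" or "enhancement"
    component: str            # name of the released component
    origin_page: str          # wiki page where the validator comment originated
    description: str          # descriptive text
    links: List[str] = field(default_factory=list)  # work plan / validation wiki


def route_finding(furps_category: str, is_error: bool) -> str:
    """Map a validator finding to where it should be recorded."""
    if is_error:
        return "issue"            # errors -> Issue ticket in TRAC
    if furps_category in ("Functionality", "Usability", "Performance"):
        return "enhancement"      # produced in liaison with the WP3 Leader
    if furps_category == "Supportability":
        return "other fora"       # usually discussed outside TRAC
    # The procedure does not say where non-error Reliability findings go.
    raise ValueError(f"no routing rule for {furps_category!r}")


def make_ticket(component: str, origin_page: str, description: str,
                furps_category: str, is_error: bool = False,
                links: Optional[List[str]] = None) -> Optional[ValidationTicket]:
    """Build a ticket for a finding, or return None if it is handled elsewhere."""
    kind = route_finding(furps_category, is_error)
    if kind == "other fora":
        return None
    return ValidationTicket(kind, component, origin_page, description,
                            list(links or []))
```

For example, `make_ticket("Cotrix", "Cotrix", "Code list import fails", "Functionality", is_error=True)` yields an issue ticket, while a supportability remark returns `None` because it is discussed outside TRAC.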

Validation Results: Overall Summary

November 2012 Validation Round

In one sentence, the September 2012 status of the validation reveals that the delivered Virtual Research Environments meet the expectations.

As expected, the bulk of the work in Period 1 went towards establishing data access and discovery functionality, with less emphasis on user-facing VRE components. Hence, the validation in P1 focused on those VREs that could already be equipped with data access components.

The VREs build on a complete e-infrastructure, which is not subject to EA-CoP validation. The entire validation effort did not reveal any issues related to the architecture, design, or operation of the underlying components. These components handle, e.g., the data streams, storage, user access and management, and security. These functionalities are not validated, but since no issues were reported, they can be considered to have received the silent approval of the EA-CoP. Some comments from the validation are worth mentioning here:

- The improvements to the work-space are impressive, and allow for much better collaboration on data and results;

- The messaging system vastly reduces the effort to share large datasets or simple messages;

- The integration option with the desktop was appreciated;

- The gCubeApps concept was considered an important step to attract 'light' users (data consumers);

- The live access and search of the biodiversity data was considered very useful, and was recommended to be made available at generic level.

iMarine technologies serve a plethora of use-cases, and VREs may be re-used across business cases. The validation efforts recognize that the target often has to be a subset of the expected BC, and that a validation is not an atomic or isolated effort.

The validation efforts were spread out over the entire Period 1 and are still ongoing. As of 20 November 2012, the following VRE functionality was validated successfully:

- ICIS Data import, curation and storage;

- ICIS TS manipulation, graphing, and use in R;

- ICIS TS to GIS, Mapviewer;

- AquaMaps Map Generation and data manipulation;

- AquaMaps GIS Viewer and geospatial product facilities;

- FCPPS and VME-DB Reporting tools;

- FCPPS and VME-DB Documents work-flow;

- VTI Capture aggregator.

June 2013 Validation Round

In summary, validation efforts between November 2012 and June 2013 revealed that all delivered Virtual Research Environments and related tools meet the expectations.

The validation during P2 did not require the engagement of EA-CoP representatives, for a number of reasons:

- The VREs released in the period were strongly based on re-purposed existing VREs, which do not require a separate validation effort.

- Earlier validation rounds triggered substantial development efforts that were not completed before June 2013. Validation concerns entire EA-CoP VREs or similar large components. It is NOT testing.

- The EA-CoP was equipped with several VREs or similar functionality, but could not organize itself to perform a complete validation. For example, FishFinderVRE was released, but the project encountered delays.

- In P2, the notion of products was reinforced, also following the reviewers' recommendation, and effort was invested in defining and designing the functionality to offer iMarine services through bundles rather than through incidental VRE products. This will position iMarine and D4Science better in the future, although the delivery and validation of some VREs suffered.

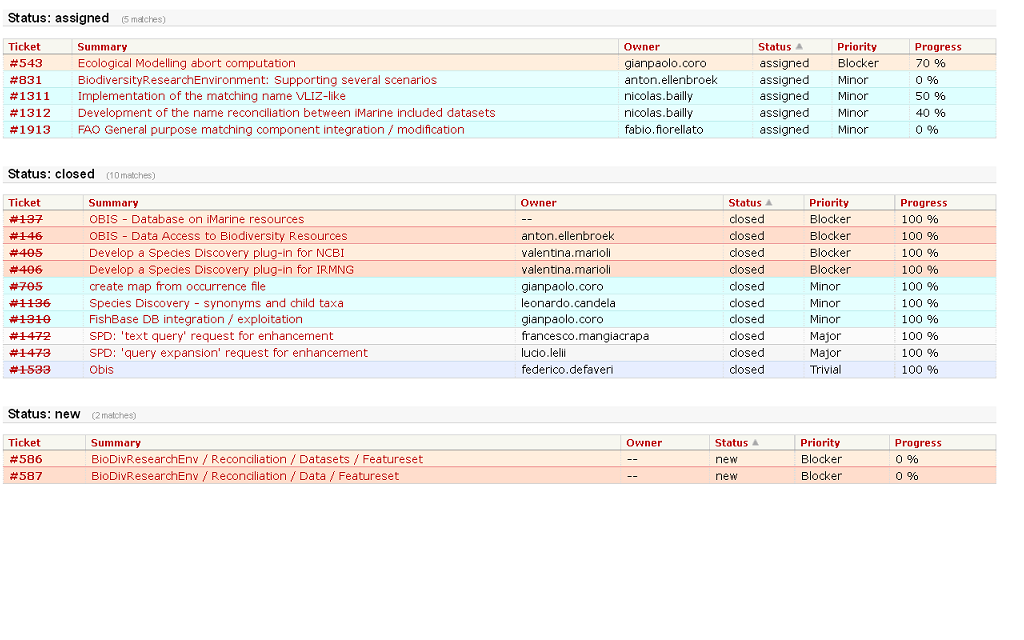

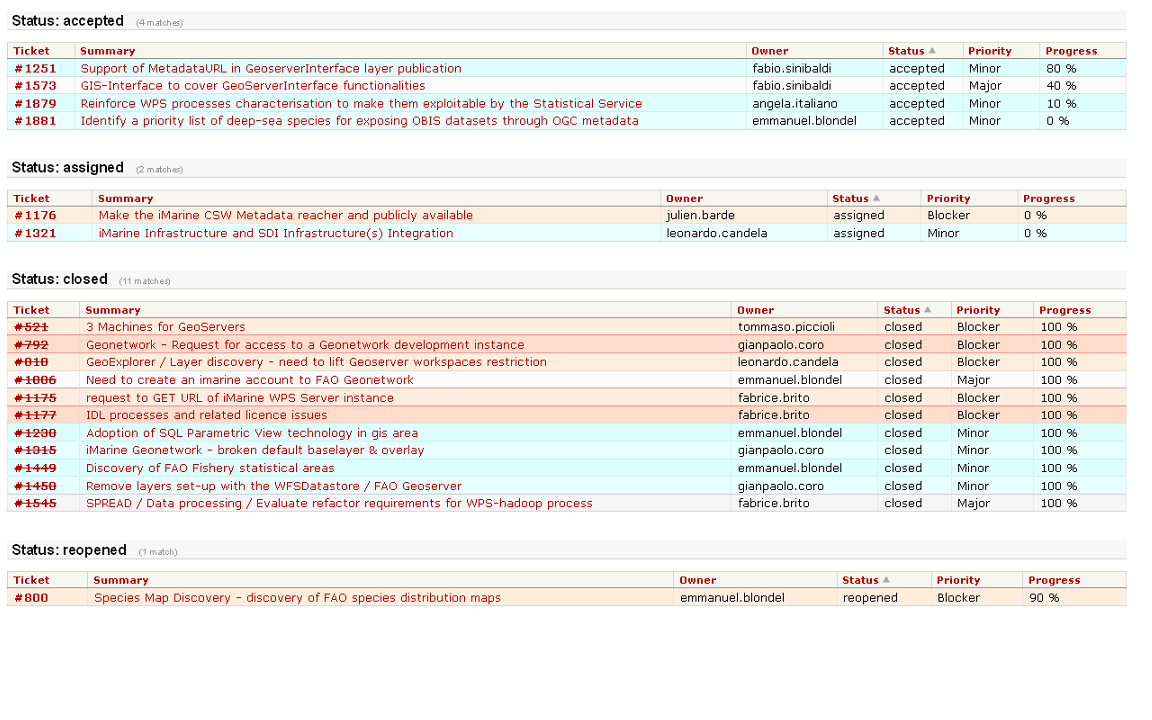

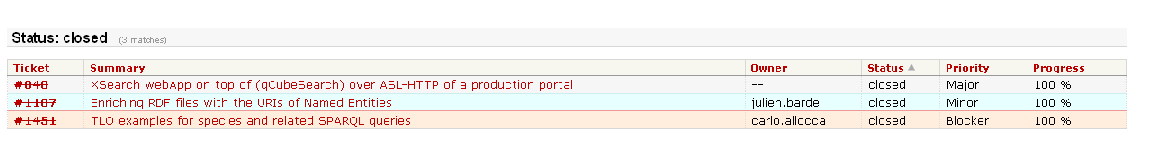

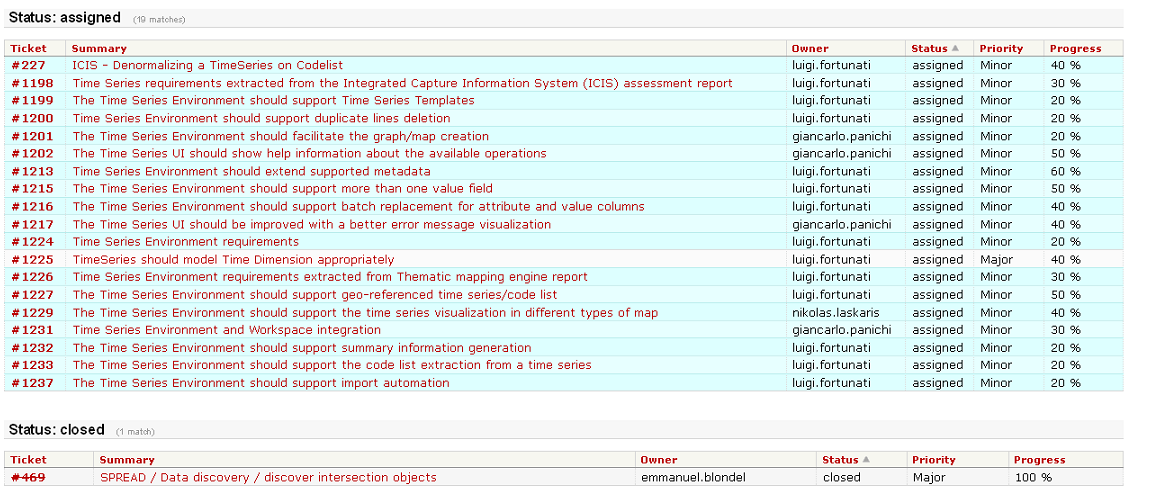

In P2, rather than validating with the EA-CoP, the key activities were recorded in the TRAC system. TRAC, a software development monitoring tool, focuses on development tasks and is not suited to convey progress or impact indicators. TRAC can, however, be used to visualize key tickets that evidence development objectives. It is important to note the difference between the high-level key tickets reported and the cascade of minor tickets they can result in to implement or correct specific functionality. Screenshots of such reports can thus be considered proxies for the validation effort, as they were prepared in close consultation with WP3 and EA-CoP representatives after validation activities.

The following TRAC reports are available and guide the development effort in the four clusters towards their materialization in StatsCube, BiolCube, ConnectCube and GeosCube:

December 2013 Validation Round

In summary, validation efforts between June 2013 and December 2013 revealed that all delivered Virtual Research Environments and related tools meet the expectations.

The release of several key components in the period of December 2013 - February 2014 was synchronized with this validation effort. This caused some delay in producing the validation reports in draft, but this delay was recovered by shortening the reporting work-flow. Thus, the deadline for Deliverable D3.5 (M28 - February 2014) could be met, yet some of the detailed validation reports will be made available when MS20 - Validation reports (M29) is produced in March 2014.

The detailed wiki-pages below contain the validation results. Some highlights of the validation activities:

During the reporting period (M11 - M28) the following validation activities were reported:

- Complete validation of EA-CoP accessible sections of FishFinderVRE, BiOnym, VME-DB, and

- Partial validation on selected sections of existing infrastructure components was performed for TunaAtlas and VesselActivityAnalyzer.

- Validation of components intended to be integrated in the e-Infrastructure, but not yet ready for integration, was performed for Cotrix, SPREAD, and TLO related services.

- Validation has yet to commence for NEAFC, CRIA and some IRD activities developed in their respective systems.

A new element in the validation is the assessment of non-technical features made available through the infrastructure, such as the quality and coverage of data access and sharing policies, the value of generic e-infrastructure services such as the Workspace and the Social Tools, and the value of contributing to and becoming part of an ecosystem of topical expertise. The value here is difficult to validate, and rests on anecdotal observations.

July/August 2014 Validation Round

The validation efforts between July 2014 and August 2014 expanded beyond the delivered Virtual Research Environments and also included newly emerging exploitation activities based on previously released services and tools.


The table below contains links to detailed wiki-pages with the validation results. Some highlights of the validation activities:

During the reporting period (M29 - M33) the following validation activities were reported:

- Continued monitoring of delivered VREs, such as AquaMaps, FishFinderVRE, Trendylyzer, BiodiversityLab, Vessel Activities Analyzer, Scalable Data Mining and EcologicalModeling. Here no validation reports were needed, but the exploitation report and/or products (e.g. publications) evidence the completeness of previous validation rounds.

- Complete validation of EA-CoP accessible sections of BiOnym, VME-DB, Cotrix, and NEAFC

- Partial validation on selected sections of existing infrastructure components was performed for Cotrix, TabMan, .

- Validation of components intended to be integrated in the e-Infrastructure, but not yet ready for integration, was performed for , , .

- Validation has yet to commence for NEAFC, CRIA and some IRD activities developed in their respective systems.

- Validation reports were not produced for derivative products based on existing VREs, such as iMarineBoardVRE,

- Validation reports for derivative products that add functionality to existing products, such as new algorithms for the Statistical Manager, were produced where a use case was implemented through an experiment (see the Experiments page for the list).

A new element in the validation is the assessment of non-technical features made available through the infrastructure, such as the quality and coverage of data access and sharing policies, the value of generic e-infrastructure services such as the Workspace and the Social Tools, and the value of contributing to and becoming part of an ecosystem of topical expertise. The value here is difficult to validate, and rests on anecdotal observations.

Validation Results: Detailed Reports

The rich heterogeneity of the e-infrastructure and the re-use and orthogonality of many components imply that validation need not always be an exhaustive exercise for each VRE; a component validated in one VRE does not have to be validated again when re-used in another. A validation report on, e.g., VTI can only be understood if one has practical knowledge of the ICIS VRE.

This is reflected in the structure of the validation reports, which can seem skewed in (dis)favor of the earlier components, where validation resulted in effective and structural improvements to the benefit of other VREs. The evolving ecosystem around ICIS, FCPPS, and AquaMaps is validated with these three key VREs as the starting point.

Validation Log

| VRE Name | VRE Component Summary Page | Last activity (MM.YY) | Detail report | Status |
|---|---|---|---|---|
| ICIS | Curation | 09.12 | | Validated |
| ICIS | Code List Management | 08.14 | TA validation | Validated |
| ICIS | SPREAD P1 | 07.13 | | Validated |
| ICIS | VTI | 04.12 | Detail report | Validated |
| ICIS | Statistical analysis with R | 08.12 | | Validated |
| ICIS - Cotrix | Cotrix | 08.14 | | Validated |
| TabularDataLab - tuna Atlas | TabularDataLab | 08.14 | | Validated |
| Reporting | FCPPS | 02.12 | | Validated |
| Reporting | VME DB | 07.14 | | Validated |
| Reporting | FishFinderVRE | 01.13 | | Validated |
| AquaMaps | AquaMaps Data management | 10.12 | | Validated |
| AquaMaps | AquaMaps Map generation | 10.12 | | Validated |
| BiodiversityResearchEnvironment | BiodiversityResearchEnvironment | 05.14 | | Validated |
| BiodiversityLab | BiodiversityLab | 09.14 | | Validated |
| Ecological Modeling | Overview | 12.12 | | Not started |
| ScalableDataMining | Overview | 07.14 | | Not started |
| Trendylyzer | Trendylyzer | 01.14 | | Validated |
| BiOnym | Overview | 08.14 | | Validated |
| VME-DB | Overview | 07.14 | | Validated |
| Cotrix | Overview | 07.14 | | Validated |
| SPREAD | Overview | 02.14 | | Validated |
| Chimaera | Overview | 07.14 | | Validated |

EA-CoP Training

The training of the EA-CoP is related to the validation activity. In the validation effort, key representatives of the EA-CoP participate in a review of one or more components. Their feedback is essential to define the level and detail required in the training materials. Feedback on usability in particular is also needed by the development team to (a) implement improvements to the code, (b) improve messages to users, (c) improve the communication style of the applications, and (d) improve the online help.

Therefore, a description of the training materials, the training events, and the trainers can only be made after the validation effort.

The heterogeneity of the e-infrastructure and the re-use of many components imply that developing training material can be a simple exercise for each VRE; training for one VRE can be re-used in another. For example, the ICIS training material covers much of VTI, which can only be understood with practical knowledge of the ICIS VRE.

The training in iMarine as described here focuses on end-users. More specific training for, e.g., gCube developers, VRE managers, and VRE designers is already available. These are, however, beyond the scope of the EA-CoP training.

Training Log

| VRE Name | VRE online help | VRE training manual | Last training activity (MM.YY) | Detail report | No. of trainees |
|---|---|---|---|---|---|
| ICIS | Completed | In progress | 10.12 | Yann report | 8 |
| Biodiversity research environment | Completed | In progress | 11.13 | GP Coro report | 8 |
| VME-DB | Completed | In progress | 01.14 | A.Gentile report | 8 |
| SmartFish Mauritius | Completed | In progress | 01.14 | Y.Laurent / C.Baldassarre report | 8 |